Ember

A prescriptive data catalog for scalable platforms

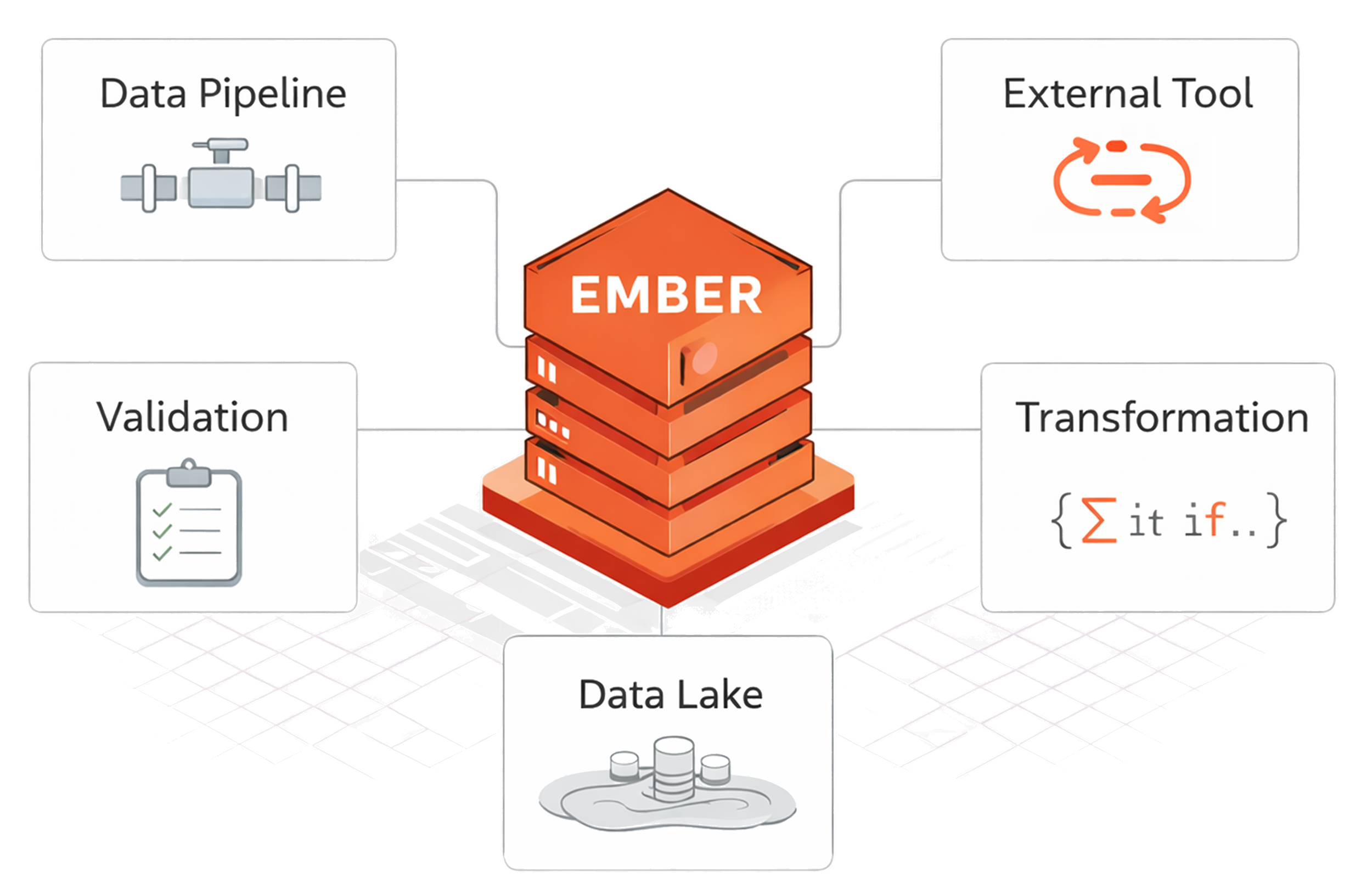

Ember defines how data is shaped, validated, and interpreted across the platform so logic remains consistent as pipeline complexity scales.

Ember is DataForge’s data catalog. Rather than simply documenting what already exists, it defines how data should behave.

Unlike traditional catalogs that observe data after the fact, Ember is prescriptive by design. It captures intent as declarative logic and applies it consistently across pipelines so meaning does not fragment as the platform grows.

At its core, Ember combines a structured knowledge model with reusable logic. This allows organizations to define data meaning once and apply it everywhere without duplicating transformations or embedding business rules deep inside pipeline code.
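To make "define once, apply everywhere" concrete, here is a minimal Python sketch that assumes rules are stored as plain data and evaluated by one shared interpreter. `FieldRule`, `OPS`, and `apply_rules` are hypothetical names chosen for illustration. They are not Ember's API.

```python
import operator
from dataclasses import dataclass


# Hypothetical: a declarative rule is plain data, not code embedded in a pipeline.
@dataclass(frozen=True)
class FieldRule:
    name: str
    column: str
    op: str        # must be one of the keys in OPS
    value: object


# The only comparisons a rule may use. This shared interpreter is the single
# place where rule semantics live, so no pipeline re-implements them.
OPS = {"ge": operator.ge, "le": operator.le, "eq": operator.eq, "ne": operator.ne}


def apply_rules(rows, rules):
    """Evaluate every rule against every row; return (row_index, rule_name) failures."""
    failures = []
    for i, row in enumerate(rows):
        for rule in rules:
            if not OPS[rule.op](row[rule.column], rule.value):
                failures.append((i, rule.name))
    return failures


# Defined once...
AMOUNT_RULES = [
    FieldRule("amount_non_negative", "amount", "ge", 0),
    FieldRule("currency_is_usd", "currency", "eq", "USD"),
]

# ...and reused by two independent (hypothetical) pipelines without copying logic.
orders = [{"amount": 120, "currency": "USD"}, {"amount": -5, "currency": "USD"}]
refunds = [{"amount": 30, "currency": "EUR"}]

print(apply_rules(orders, AMOUNT_RULES))   # [(1, 'amount_non_negative')]
print(apply_rules(refunds, AMOUNT_RULES))  # [(0, 'currency_is_usd')]
```

Because the rule list is data, changing `amount_non_negative` in one place changes its behavior in every pipeline that uses it.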

Catalogs That Observe Do Not Scale

Catalogs That Prescribe Do

Traditional data catalogs are built to document what already exists. They scan schemas, collect descriptions, and surface metadata after pipelines are deployed.

This works early on. It breaks down as pipelines multiply and logic diverges.

Ember takes the opposite approach.

Traditional Catalogs

Observe data after pipelines are built

Document outcomes rather than intent

Track tables and assets, not logic

Drift as pipelines evolve independently

Require manual governance to stay accurate

Ember

Defines intent before pipelines run

Prescribes how data should behave

Encodes logic, validation, and meaning

Applies rules consistently across pipelines

Keeps meaning stable as complexity grows

Because Ember defines behavior rather than recording outcomes, it becomes part of how pipelines are built and operated, not a system consulted after the fact.

With Ember, the data platform has a true system of record: an explicit source of truth for how data is defined and processed.

Where Definition Meets Execution

Ember does not stop at defining logic. It also captures how that logic executes in the real world.

Every declarative rule, transformation, and validation defined in Ember is linked to the pipelines that run it and the outcomes those pipelines produce. Execution details are not inferred later or stitched together from external systems. They are recorded alongside the logic itself.

This creates a living system of record that reflects both intent and reality.

What Ember captures

Declarative definitions for data shape, rules, and validation

How declarative logic executes within the single enforced pipeline architecture defined by Alloy

Execution metadata including timing, dependencies, and outcomes

Lineage that reflects actual transformations, not inferred guesses

Because definition and execution are stored together, Ember becomes the authoritative source for understanding how data behaves across the entire platform.

Understand Pipelines Without Reverse Engineering

In most data platforms, understanding pipeline behavior requires custom logging, external observability tools, and manual investigation.

In Ember, visibility is automatic and always accurate.

Because logic is declarative and execution is standardized, Ember captures operational detail as pipelines run. There is no need to instrument pipelines or reconstruct behavior from scattered logs.

What teams can see by default

Which rules and transformations ran

Where logic succeeded or failed

How long each step took

What downstream data was affected

This makes pipeline behavior understandable without reading code or tracing execution manually.
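The following is a hypothetical Python illustration of recording execution alongside declarative steps, not Ember's implementation. Because every step has the same shape, one runner can capture which steps ran, whether each succeeded, how long it took, and which downstream steps were affected, with no per-pipeline instrumentation. `Step`, `RunRecord`, `run`, and the step names are invented for the example, and steps are assumed to be listed in dependency order.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    inputs: tuple   # names of datasets this step reads
    output: str     # name of the dataset this step writes
    fn: Callable    # hypothetical transformation body


@dataclass
class RunRecord:
    step: str
    status: str     # "succeeded", "failed", or "skipped"
    seconds: float
    error: str = ""


def run(steps, sources):
    """Run steps in the given order and return one execution record per step."""
    data = dict(sources)
    records = []
    for step in steps:
        missing = [d for d in step.inputs if d not in data]
        if missing:
            # An upstream failure means this step cannot run; record it instead of hiding it.
            records.append(RunRecord(step.name, "skipped", 0.0, f"missing inputs: {missing}"))
            continue
        start = time.perf_counter()
        try:
            data[step.output] = step.fn(*(data[d] for d in step.inputs))
            status, error = "succeeded", ""
        except Exception as exc:
            status, error = "failed", repr(exc)
        records.append(RunRecord(step.name, status, time.perf_counter() - start, error))
    return records


steps = [
    Step("clean_orders", ("raw_orders",), "orders",
         lambda rows: [r for r in rows if r["amount"] >= 0]),
    Step("daily_total", ("orders",), "totals",
         lambda rows: sum(r["amount"] for r in rows)),
    Step("fx_convert", ("totals", "fx_rates"), "totals_eur",
         lambda total, fx: total * fx["EUR"]),   # fails: no EUR rate supplied
    Step("report", ("totals_eur",), "summary",
         lambda total: f"EUR total: {total:.2f}"),
]

records = run(steps, {"raw_orders": [{"amount": 10}, {"amount": -1}],
                      "fx_rates": {"GBP": 0.8}})
for r in records:
    print(f"{r.step:<13} {r.status:<10} {r.seconds:.6f}s {r.error}")
```

In this run, the failure in `fx_convert` appears as a `failed` record with its error, and `report`, which reads its output, is recorded as `skipped` rather than silently missing.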

Why this matters

When teams can see what happened and why, they can debug faster, onboard new developers more easily, and make changes with confidence.

Operational clarity is not a feature. It is a consequence of a well-defined system.

Logic Has a Shape

Ember does not allow logic to be defined arbitrarily.

Every rule, transformation, and validation in Ember must conform to a small set of explicit, structured patterns. Logic is defined declaratively, attached to known entities, and scoped to specific stages of a fixed pipeline architecture.

Custom code is supported, but only in clearly defined extension points and only when necessary. It operates within the same structural boundaries rather than redefining how the pipeline works.

What this structure enforces

Logic must be defined declaratively, not embedded ad hoc in pipelines

Transformations attach to known entities and lifecycle stages

The unit of work is fixed and consistent across all pipelines

Custom code is allowed only in controlled, explicit locations
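As a rough illustration of how a fixed shape can be enforced, the hypothetical checker below accepts a definition only if it uses known fields, attaches to a known entity and lifecycle stage, and places custom code only in a designated extension point. The entity names, the stage names, and the assumption that custom code is limited to an `enrich` stage are invented for the example. They are not Ember's actual model.

```python
# Hypothetical catalog contents: what a definition is allowed to attach to.
KNOWN_ENTITIES = {"orders", "customers"}
STAGES = {"ingest", "enrich", "validate", "publish"}
ALLOWED_KINDS = {"rule", "transformation", "validation"}
REQUIRED_FIELDS = {"kind", "entity", "stage", "expression"}
CUSTOM_CODE_STAGES = {"enrich"}  # assumed single extension point for custom code


def check_definition(defn: dict) -> list:
    """Return every structural violation; an empty list means the definition conforms."""
    errors = []
    keys = set(defn)
    missing = REQUIRED_FIELDS - keys
    if missing:
        errors.append(f"missing fields: {sorted(missing)}")
    unknown = keys - REQUIRED_FIELDS - {"custom_code"}
    if unknown:
        errors.append(f"unknown fields: {sorted(unknown)}")
    if "kind" in defn and defn["kind"] not in ALLOWED_KINDS:
        errors.append(f"unsupported kind: {defn['kind']!r}")
    if "entity" in defn and defn["entity"] not in KNOWN_ENTITIES:
        errors.append(f"unknown entity: {defn['entity']!r}")
    if "stage" in defn and defn["stage"] not in STAGES:
        errors.append(f"unknown stage: {defn['stage']!r}")
    if "custom_code" in defn and defn.get("stage") not in CUSTOM_CODE_STAGES:
        errors.append(f"custom code is only allowed in stages {sorted(CUSTOM_CODE_STAGES)}")
    return errors


ok = {"kind": "validation", "entity": "orders", "stage": "validate",
      "expression": "amount >= 0"}
bad = {"kind": "validation", "entity": "invoices", "stage": "validate",
       "expression": "amount >= 0", "custom_code": "run_my_script()"}

print(check_definition(ok))   # []
print(check_definition(bad))
```

The checker is deliberately deterministic: the same definition always produces the same list of violations, regardless of who or what submitted it.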

This constraint is intentional.

The result

Dependencies are always implicit in the structure

Orchestration is never manually defined

Pipeline design disappears as a concern

Complexity grows without multiplying workflows

Instead of every pipeline becoming its own orchestrated system, logic simply slots into a known structure.
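The sketch below shows one way dependencies can stay implicit. Each definition declares only which datasets it reads, and the execution order is derived with a topological sort from Python's standard `graphlib` module rather than written by hand. The dataset names and the `execution_order` helper are hypothetical, and this illustrates the general technique, not how Ember or Alloy actually schedules work.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical definitions: output dataset -> datasets it reads.
# Names ending in "_raw" are sources with no producing definition.
DEFINITIONS = {
    "orders_clean": {"orders_raw"},
    "fx_rates_clean": {"fx_rates_raw"},
    "revenue_usd": {"orders_clean", "fx_rates_clean"},
    "revenue_report": {"revenue_usd", "orders_clean"},
}


def execution_order(definitions):
    """Derive a valid run order from declared inputs; raises CycleError on cycles."""
    # Keep only edges between defined outputs; raw sources need no scheduling.
    graph = {name: {r for r in reads if r in definitions}
             for name, reads in definitions.items()}
    return list(TopologicalSorter(graph).static_order())


order = execution_order(DEFINITIONS)
print(order)

# A circular declaration is rejected instead of producing an invalid plan.
try:
    execution_order({"a": {"b"}, "b": {"a"}})
except CycleError as exc:
    print("rejected:", exc.args[0])
```

Adding a new definition only requires declaring its inputs. Its place in the order, and the detection of cycles via `graphlib.CycleError`, follow automatically.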

Meaning remains consistent. Execution remains predictable.

This is a subtle advantage in small systems.

At scale, it is a fundamental shift.

Ember is not just a catalog that documents what happened.

It is a prescriptive definition layer that replaces pipeline design itself.

By fitting logic into a single enforced execution model, Ember removes the need to:

Design pipelines

Scope units of work, models, or packages

Define dependencies between steps

The architecture is predetermined.

Built for Automation at Scale

The same constraints that allow large engineering teams to scale without chaos are what make AI-driven automation possible.

Ember works because it removes choice where choice creates fragmentation.

Logic has a fixed shape.

Execution follows a single model.

Definitions are explicit and shared.

This is what allows hundreds of engineers to contribute without creating hundreds of patterns.

Talos is DataForge’s AI control plane that translates natural language into Ember’s structured definitions.

Users describe what they want at a high level. Talos attempts to express that intent in Ember’s declarative structures. When the result does not conform, Ember rejects it, exactly as it would reject a non-conforming definition from a human developer.

There is no special path for AI. Ember is built from the ground up to support both developers and AI.

LLMs are inherently non-deterministic, which is unacceptable in enterprise data systems that drive high-impact decisions. Ember enforces deterministic structures that make AI-generated changes safe, reviewable, and repeatable.

Ember is the missing bridge between large language models and fully automated data pipelines.